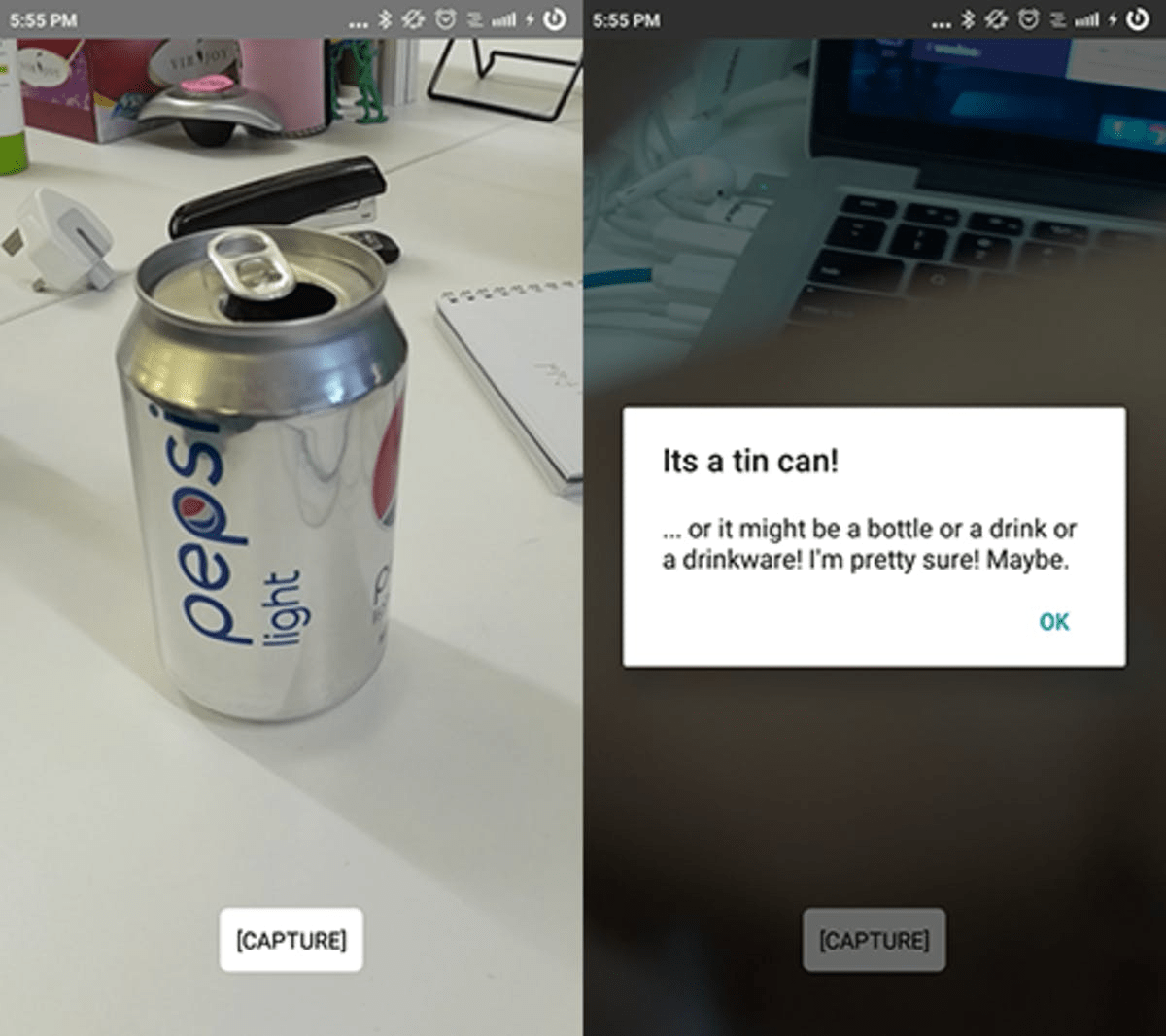

With all the craze around building SeeFood joke apps, like the hot dog recognition app that became a hit recently, I thought it would be fun to try my hand at it as well. I’ve had my eye on the Google Cloud Vision API for some time now but never had the motivation to build something with it. So I figured I’d use my free time to learn React Native and quickly build a simple image recognition app.

Humility is important

First and foremost, I used React Native to build the camera app. It's a pretty cool concept: each component bundles its own styles (written in a CSS-like syntax), JS logic and markup. It binds to the native Android/iOS APIs, so the result basically functions like a native app. I like boilerplate generators because they let me quickly learn the side tools that people develop with (Flow, ESLint, Expo, etc.), so I used create-react-native-app to generate the project for me.

After familiarising myself with the generated project, I began building the camera screen. I like how Snapchat opens straight into a full-screen camera, so I picked react-native-camera. Then I figured that sending the full, huge-ass camera image couldn’t be a good idea, so I pulled in react-native-image-resizer to do the resizing. It was looking like this would be as easy as tying a bunch of components together.
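The resizing step mostly comes down to picking target dimensions. Here is a minimal sketch, assuming the goal is to shrink the photo so its longest side fits within a bound while keeping the aspect ratio. `fitWithin` is a hypothetical helper and the 640 px default is an assumed value, not one taken from the app; the resulting width and height are what you would hand to the resizer library.

```typescript
// Hypothetical helper: scale an image so its longest side is at most
// `maxSide` pixels while preserving the aspect ratio.
// The 640 px default is an assumed bound, not a value from the original app.
function fitWithin(
  width: number,
  height: number,
  maxSide: number = 640,
): { width: number; height: number } {
  if (width <= 0 || height <= 0 || maxSide <= 0) {
    throw new Error("Dimensions must be positive");
  }
  // Never upscale: images that already fit keep their size (scale = 1).
  const scale = Math.min(1, maxSide / Math.max(width, height));
  return {
    width: Math.round(width * scale),
    height: Math.round(height * scale),
  };
}

// Example: a 4032 x 3024 photo becomes 640 x 480.
const target = fitWithin(4032, 3024);
console.log(target.width, target.height);
```

Capping the scale at 1 means small images are passed through untouched rather than blown up, which would only add bytes without adding detail.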

Registering for the Google Cloud Vision API meant going to the Google Cloud console and generating an API key. With the key, you can make a REST API call and send the image from the device as a base64-encoded string (as you may have guessed, that’s another component package).
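A minimal sketch of that REST call, assuming the `LABEL_DETECTION` feature of the `images:annotate` endpoint with the API key passed as the `key` query parameter. The helper names (`stripDataUriPrefix`, `buildAnnotateBody`, `buildAnnotateUrl`, `annotateImage`) are hypothetical and `maxResults = 10` is an arbitrary choice. It relies on the global `fetch`, which React Native provides.

```typescript
// Sketch of a Cloud Vision REST call (images:annotate).
// Assumptions: LABEL_DETECTION is the feature used, and the API key is
// sent as the `key` query parameter. Helper names are hypothetical.
const VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate";

interface AnnotateRequestBody {
  requests: Array<{
    image: { content: string };
    features: Array<{ type: string; maxResults: number }>;
  }>;
}

// The API expects raw base64, so drop a "data:image/...;base64," prefix if present.
function stripDataUriPrefix(data: string): string {
  return data.replace(/^data:[^;,]+;base64,/, "");
}

// Build the JSON body for a single image.
function buildAnnotateBody(base64Image: string, maxResults = 10): AnnotateRequestBody {
  return {
    requests: [
      {
        image: { content: stripDataUriPrefix(base64Image) },
        features: [{ type: "LABEL_DETECTION", maxResults }],
      },
    ],
  };
}

// Append the API key as a query parameter.
function buildAnnotateUrl(apiKey: string): string {
  return `${VISION_ENDPOINT}?key=${encodeURIComponent(apiKey)}`;
}

// POST the request with the global fetch and return the parsed JSON.
async function annotateImage(apiKey: string, base64Image: string): Promise<unknown> {
  const response = await fetch(buildAnnotateUrl(apiKey), {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildAnnotateBody(base64Image)),
  });
  if (!response.ok) {
    throw new Error(`Vision API request failed with status ${response.status}`);
  }
  return response.json();
}
```

Keeping the body and URL builders as pure functions makes them easy to check without a network connection or a real key.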

For this image, the API returned this JSON object. Pretty cool and accurate!

From here on, you should probably set a threshold on the confidence score to hold the guesses to a higher standard. As a rough measuring stick, humans are often said to have about a 0.95 success rate on image recognition challenges, so put the threshold at 0.8 or so. That means you only tell the user what the object is if the score is 0.8 or above. After that, I replaced the capture button with a circle and added an animation that plays while the app identifies the image.
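The thresholding can be sketched as a small filter over the response. This assumes a `LABEL_DETECTION` request, whose results come back as `labelAnnotations` entries with a `description` and a `score` between 0 and 1; the interfaces below model only those two fields, and `confidentLabels` and `topLabel` are hypothetical names.

```typescript
// Only the fields used here; real label annotations carry more (mid, topicality, ...).
interface EntityAnnotation {
  description: string;
  score: number; // confidence in [0, 1]
}

interface AnnotateResponse {
  responses: Array<{ labelAnnotations?: EntityAnnotation[] }>;
}

// Keep labels whose score meets the threshold, best first.
// filter() returns a new array, so sort() does not mutate the response.
function confidentLabels(response: AnnotateResponse, threshold = 0.8): EntityAnnotation[] {
  const labels = response.responses[0]?.labelAnnotations ?? [];
  return labels
    .filter((label) => label.score >= threshold)
    .sort((a, b) => b.score - a.score);
}

// The name to show the user, or null when nothing clears the threshold.
function topLabel(response: AnnotateResponse, threshold = 0.8): string | null {
  const [best] = confidentLabels(response, threshold);
  return best ? best.description : null;
}
```

Returning `null` instead of a low-confidence guess lets the UI decide how to say "no idea" rather than showing a wrong answer.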

Take a pic > resize > convert to base64 > send it to the API > filter the results > display them. Total dev time? A few hours if you know what you’re doing (I didn’t).
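The whole flow can be sketched as one async function. Each step is passed in as a hypothetical callback (`takePicture`, `resize`, `toBase64`, `annotate`) standing in for the camera, resizer, base64 and Vision API code, so the sketch makes no assumptions about those libraries' actual APIs. The fallback message and the 0.8 default are assumptions.

```typescript
interface Label {
  description: string;
  score: number;
}

// Hypothetical callbacks standing in for the camera, the resizer,
// the base64 conversion and the Vision API call.
interface PipelineSteps {
  takePicture: () => Promise<string>;             // returns the photo's URI
  resize: (uri: string) => Promise<string>;       // returns the resized photo's URI
  toBase64: (uri: string) => Promise<string>;     // returns the image as base64
  annotate: (base64: string) => Promise<Label[]>; // returns the API's labels
}

// Take a pic > resize > convert to base64 > call the API > filter > pick a result.
// The fallback text and the 0.8 default threshold are assumptions.
async function identify(steps: PipelineSteps, threshold = 0.8): Promise<string> {
  const photoUri = await steps.takePicture();
  const smallUri = await steps.resize(photoUri);
  const base64 = await steps.toBase64(smallUri);
  const labels = await steps.annotate(base64);
  const confident = labels.filter((label) => label.score >= threshold);
  if (confident.length === 0) {
    return "Not sure what that is";
  }
  // Show the highest-scoring label that cleared the threshold.
  const best = confident.reduce((a, b) => (b.score > a.score ? b : a));
  return best.description;
}
```

Injecting the steps this way keeps the flow testable without a device, since each callback can be swapped for a stub.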

Source can be found here: https://github.com/dividezero/whattheheckisthis
